The study introduces a novel approach using PnP DFs that improves the image editing and generation process, giving creators greater control over their final product. However, complexities, ambiguity, and the need for custom content limit current rendering techniques.

Recent breakthroughs in generative AI are unlocking new approaches for developing powerful text-to-image models. candidate at the Weizmann Institute of Science. Our work is one of the first methods to provide users with control over the image layout,” said Narek Tumanyan, a lead author and Ph.D. However, the main challenge in applying them to real-world applications is the lack of user-controllability, which has been largely restricted to guiding the generation solely through input text. “Recent text-to-image generative models mark a new era in digital content creation. It could also strengthen industries reliant on animation, visual design, and image editing. The ability to edit and generate content reliably and with ease has the potential to expand creative possibilities for artists, designers, and creators.

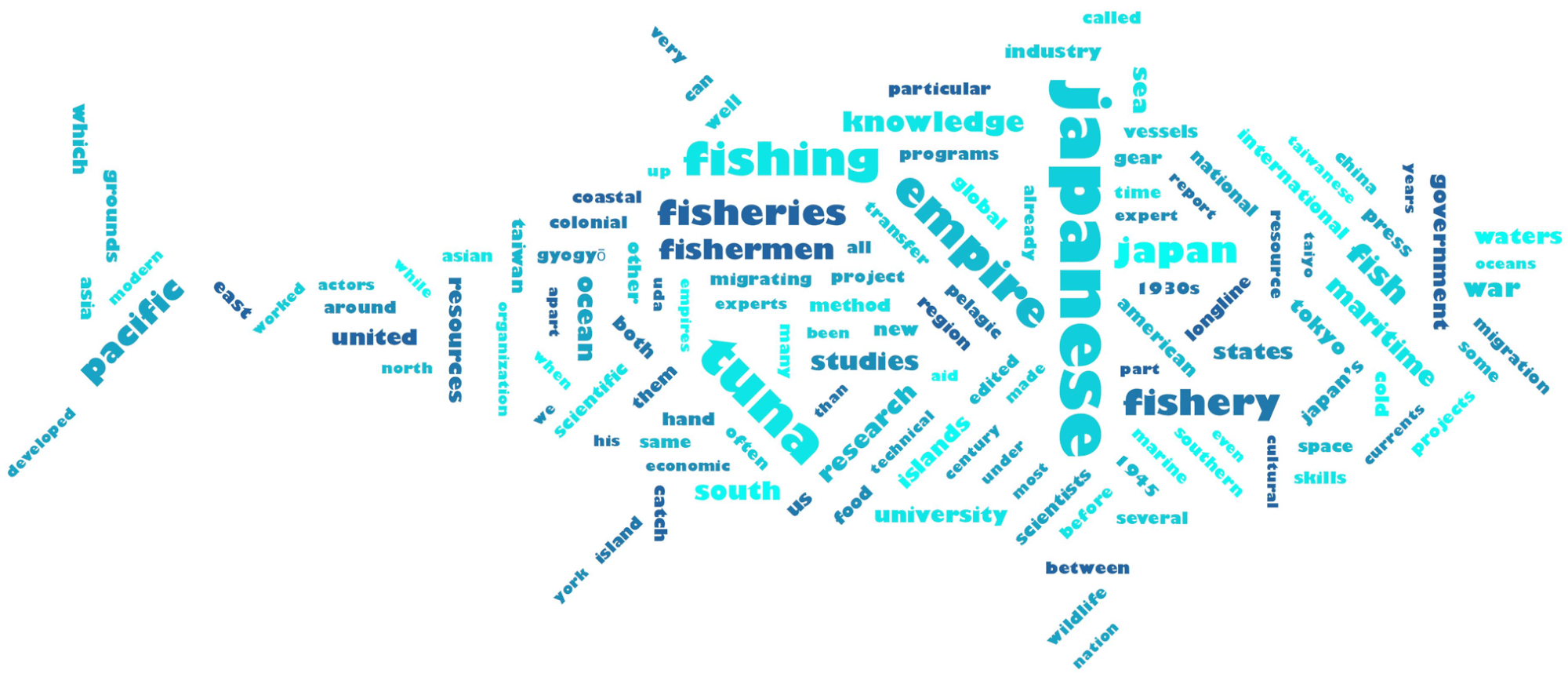

With this work, visual content creators can transform images into visuals with just a single prompt image and a few descriptive words. The innovative study presents a framework using plug-and-play diffusion features (PnP DFs) that guides realistic and precise image generation. New research is boosting the creative potential of generative AI with a text-guided image-editing tool.
